What’s the best day of the week to send an email? Will a blue button or a red button get more clicks? Which call-to-action is more likely to convert to a sale?

You know you’ve asked yourself these questions before, as every eager entrepreneur has. Maybe you’ve even taken to Google in the hopes of finding an answer. But every data-driven marketer will end up telling you the same thing: “It depends.”

Not a very helpful answer, huh? But there is one way to know with certainty what the right answer is to all of those questions you have about your marketing: email split testing. That’s right, you’ve got to think like a scientist. And fortunately, you don’t need a PhD to do it. Here’s how.

What Is Email Split Testing? Why Should You Do It?

Split testing, also known as A/B testing, involves running experiments and using the results to make better decisions about your marketing. You should split test your emails to get a definitive, empirical answer to your burning questions about what’s working and what’s not working in your email marketing. Instead of relying on hunches, split testing lets you rely on data (and the numbers don’t lie).

Email split testing can help you get:

- Improved open rates, click-through rates, and conversion rates

- More informed marketing so you don’t waste your time

- Better quality content more suited to your audience

- More money for your business

How to Conduct Email Split Testing by Recalling the Good Old Scientific Method

To prepare for email campaign testing, we’re going to journey back in time to grade school. Remember your science class, when the teacher made you learn the steps of the scientific method before you were allowed to put colorful liquids in test tubes? That’s basically what effective email split testing is—it’s experimentation, just as though you were running a science experiment in a lab.

Need a refresher? Put on your goggles, and let’s dive in!

Step 1: Ask a Question

Before you begin A/B testing, you need to decide why you are doing it. What are you trying to learn from this split test? Maybe you want to know the best time of day to email your subscribers, whether to use emojis in your subject line, or how to increase click-through rates. Whatever it is, you need to decide on what you hope to learn and pose it as a question.

Examples include:

- What time of day is most likely to result in the most opens?

- Does the color of the CTA button make a difference in clicks?

- Will using emojis in the subject line increase open rate?

- Will conversions increase if we include case studies directly within the email?

- Will a plain-text email result in a higher click-through rate than a graphics-heavy email?

Once you’ve got your question, you need to ask another: What kind of results will you consider successful? You need to decide on which metrics you’ll analyze and what types of results you’re hoping to find in a winning email test.

Some metrics you might measure include:

- Open rate

- Click-through rate

- Conversion rate

From there, though, you still need to decide: What’s a good open rate? What’s a good click-through rate? And what’s a good conversion rate? The answer to all of those questions, like so many in marketing, is “it depends.” It depends on what your business’s goals are.

If you’re struggling to decide, you could simply aim to improve upon what you already have, your baseline. So maybe your goal is just to get a higher open rate than your average open rate, or even the highest open rate ever.

You could also consider your sales goals. If you know there’s a specific revenue number you want to hit, and you’re relying on your email marketing to help you get there, then look at your conversion rate as the ultimate sign of whether your split testing went well.

Let’s say you want your email marketing to result in $10,000 in sales this quarter. During these three months, you plan to send six sales emails to your list of approximately 5,000 subscribers. Your average order value for your products is $100. Knowing that, you can work backward to your goal conversion rate:

$10,000 = (5,000 × X) × $100, where X is the conversion rate

Solving for X gives 0.02. So to hit your revenue goal of $10,000 this quarter, 2 percent of your list needs to buy over the course of the quarter. Spread evenly across your six sales emails, that works out to roughly 0.33 percent of recipients converting per email. Now you know what to aim for in your split testing.
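If you’d rather not do the algebra by hand, here is a minimal Python sketch of the same back-of-the-envelope math. The function name and its parameters are made up for illustration, and it assumes every subscriber receives every email and that each order is worth the average order value.

```python
def required_conversion_rate(revenue_goal, subscribers, avg_order_value, emails_sent=1):
    """Return the fraction of recipients who must buy to reach revenue_goal.

    Assumes every subscriber receives every email and that each order
    is worth avg_order_value (both are simplifications).
    """
    orders_needed = revenue_goal / avg_order_value
    total_recipients = subscribers * emails_sent
    return orders_needed / total_recipients


# Numbers from the example: $10,000 goal, 5,000 subscribers, $100 average order.
quarter_rate = required_conversion_rate(10_000, 5_000, 100)
per_email_rate = required_conversion_rate(10_000, 5_000, 100, emails_sent=6)

print(f"Share of the list that must buy this quarter: {quarter_rate:.2%}")
print(f"Share of recipients that must buy per email:  {per_email_rate:.2%}")
```

The first figure treats the quarter as one big send; the second spreads the same number of orders evenly across six emails, which is probably the more useful target when you are judging a single campaign.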

Step 2: Form a Hypothesis

Chances are, you’ve already got a hunch for how things will turn out in your A/B test. So once you’ve got your question, try to answer it using the information you already have. This is called forming your hypothesis.

Examples include:

- Sending on Tuesday will have more opens than sending on Saturday.

- A red CTA button will result in more clicks than a blue one.

- Using emojis in the subject line will increase open rate.

- Including case studies in the email will result in higher conversions.

- A plain-text email will result in higher click-throughs than a graphics-heavy one.

One important note here—your hypothesis must be something that, if it proves true, you can replicate with some reliability. This is one pitfall in split testing, where a tester may want to try out a new style or tone that differs in a nebulous way that’s hard to recreate. But unless it’s something you can rigorously and definitively roll out, it’s not a very useful hypothesis.

Now that you’ve got your hypothesis, it’s time to test it to see if it holds true.

Step 3: Pick 1 Independent Variable

Similar to the warning above, this is where most overeager marketers go wrong. If you want to land on valid results, you must pick only one independent variable (also known as a variate or variant). You can have several variations of the independent variable, but you should have only one independent variable per test.

Why? Because if you change two variables in a single test, you’ll have no way of knowing which variable was responsible for the results.

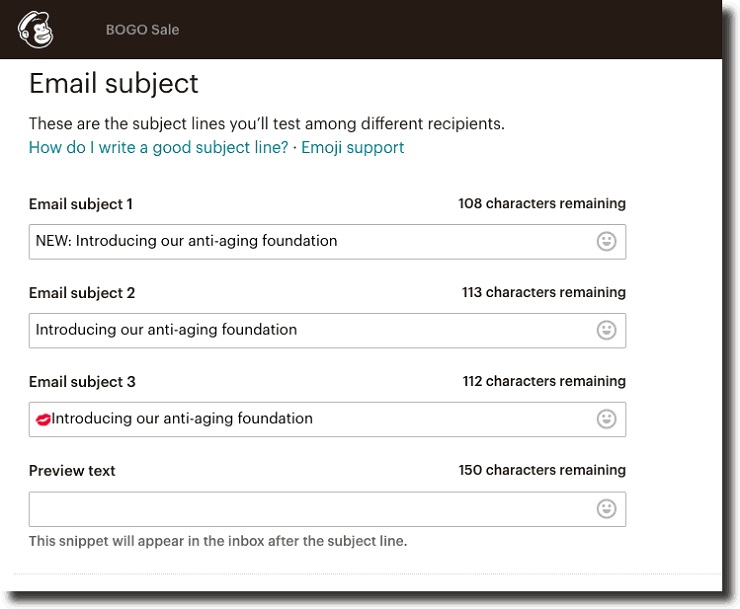

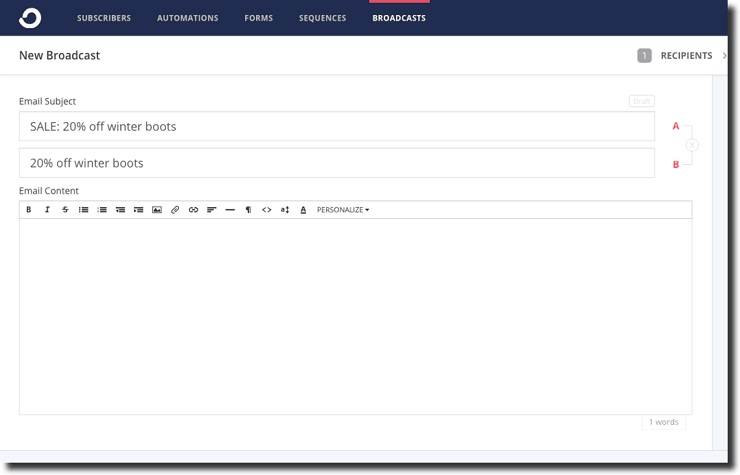

For example, you could select the subject line as your independent variable. You could then run three subject line variations during a single A/B test and see which one wins.

Here are some email marketing elements you might choose to test one at a time:

- Subject line

- Sender’s name

- Preview text

- Call to action

- Body copy

- Image

- Button color

- Discount offer wording

- Time of day to send

- Day of week to send

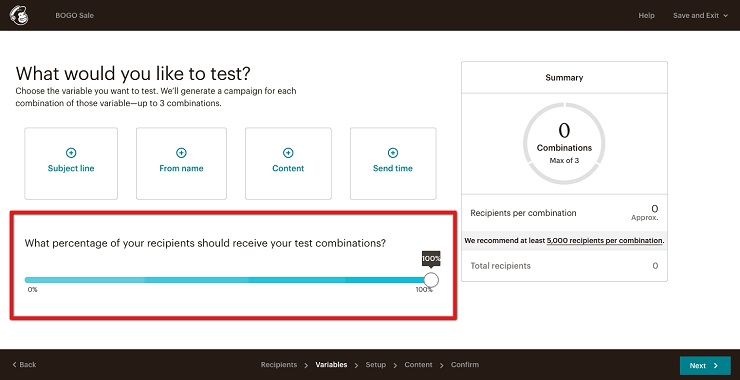

In the simplest A/B test, you’ll send only two different emails (hence the “A/B” part of the term). Your email marketing service might limit the number of variations you can send too; Mailchimp caps it at three, for example, and ConvertKit caps it at two.

Step 4: Gather a Large Enough Sample Size

It doesn’t make sense to run an A/B test on an email list of 100 people. A sample that small is unlikely to produce statistically significant results. So what’s a good sample size? According to Mailchimp:

“Send combinations to at least 5,000 subscribed contacts to get the most useful data from your test.”

That means in a split test with two variations, Mailchimp recommends you have at least 10,000 subscribers. OK, great…but what if you don’t have that many yet? You can still run split testing campaigns anyway. But I’d recommend running multiple tests so you can try to validate the results by ensuring you repeatedly get the same kinds of outcomes. This can reduce the chances that the results were just a fluke.

Also, if you’re not at 10,000 subscribers yet, it’s a great time to focus on ways to grow your email list while you’re running split testing.

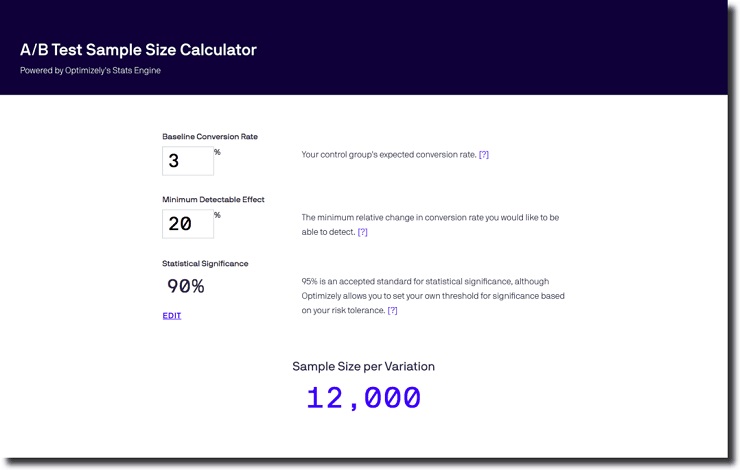

If you’re a true statistician at heart, check out Optimizely’s free sample size calculator, which tells you your ideal sample size based on your baseline conversion rate, minimum detectable effect, and desired statistical significance.
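To get a feel for how those three inputs interact, here is a rough Python sketch using the textbook sample size formula for comparing two proportions. This is not Optimizely’s method (their calculator may use different statistics and give different numbers), and the function name, the 5% significance level, and the 80% power default are assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate recipients needed per variation for a two-sided test.

    baseline_rate: current rate, e.g. 0.20 for a 20% open rate
    relative_lift: smallest relative improvement worth detecting, e.g. 0.10
    alpha, power:  assumed defaults (5% significance, 80% power)
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# A 20% open rate where you want to detect a 10% relative lift (20% -> 22%).
n_small_lift = sample_size_per_variation(0.20, 0.10)
# The same baseline, but only caring about a 50% relative lift (20% -> 30%).
n_big_lift = sample_size_per_variation(0.20, 0.50)

print(n_small_lift, n_big_lift)
```

The takeaway is the shape of the relationship rather than the exact numbers: the smaller the improvement you want to detect, the more subscribers each variation needs.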

Step 5: Run the Test

There are two ways your email marketing software will likely approach A/B testing.

- Send the test to a small portion of your list now, and whichever version wins will be sent to the remainder of your list later.

- Send the test to 100% of your list now, and whichever version wins will inform your next email marketing decision.

Either way is perfectly fine, but just realize that with the first method, you’re changing another variable: timing. Because you’re waiting an hour (or more) to send the winning email to the rest of your list, you’ll have no way of knowing if the timing affected the results.
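Your email marketing service will normally handle the splitting for you, but it can help to see what is going on under the hood. Here is a small Python sketch of both approaches, assuming a plain list of subscriber addresses. The function name, the `test_fraction` parameter, and the example addresses are all hypothetical.

```python
import random


def split_for_test(subscribers, variations=2, test_fraction=1.0, seed=None):
    """Randomly assign subscribers to equal-sized test groups.

    Returns (groups, remainder). With test_fraction=1.0 the whole list is
    tested; with a smaller fraction, the remainder gets the winner later.
    """
    rng = random.Random(seed)
    shuffled = list(subscribers)
    rng.shuffle(shuffled)  # random assignment avoids biased groups

    test_size = int(len(shuffled) * test_fraction)
    test_size -= test_size % variations  # keep the groups the same size
    per_group = test_size // variations

    groups = [shuffled[i * per_group:(i + 1) * per_group] for i in range(variations)]
    remainder = shuffled[test_size:]
    return groups, remainder


# Hypothetical list of 1,000 subscribers.
subscribers = [f"subscriber{i}@example.com" for i in range(1000)]

# Approach 1: test on 20% of the list, hold back the rest for the winner.
groups, remainder = split_for_test(subscribers, variations=2, test_fraction=0.2, seed=42)
print(len(groups[0]), len(groups[1]), len(remainder))

# Approach 2: test on the whole list.
full_groups, nobody_left = split_for_test(subscribers, variations=2, seed=42)
print(len(full_groups[0]), len(full_groups[1]), len(nobody_left))
```

The shuffle is the important part: if group A were simply the first half of your list, it might skew toward your oldest subscribers, and that would be a second variable sneaking into the test.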

Step 6: Decide What the Results Mean

Let’s say your A/B test results show that the subject line with the emoji in it received 10% more opens than the non-emoji subject line. From there, ask another question: What do the results mean?

The most obvious answer is that it means including an emoji in your subject line will increase open rates. But there might be other elements at play here. Does the type of emoji matter? Does the placement of the emoji (at the beginning of the subject line versus the end, for example) make a difference?

This is why it’s not enough to draw a conclusion from one split test. To make sure their conclusions hold true, good marketers are constantly re-testing. For example, in this case, you might send a second split test campaign with an emoji and non-emoji subject line and find that the results are completely different this time around. Alternatively, you might send a third split test that changes the placement of the emoji.

The more testing you do, the more reliable the conclusions you can draw from the results.
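One way to sanity-check whether a difference is likely to be a fluke is a two-proportion z-test, a standard statistics technique (it is not necessarily what your email software does behind the scenes). The sketch below uses made-up open counts and a hypothetical function name; a p-value below 0.05 is a common, though arbitrary, threshold for calling a result statistically significant.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(opens_a, sent_a, opens_b, sent_b):
    """Two-sided p-value for the difference between two open rates."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Made-up numbers: 20% vs. 22% open rate (10% more opens) on a large list...
p_large = two_proportion_p_value(1000, 5000, 1100, 5000)
# ...and the same open rates on a list of just 200 people.
p_small = two_proportion_p_value(20, 100, 22, 100)

print(f"Large list p-value: {p_large:.3f}")
print(f"Small list p-value: {p_small:.3f}")
```

The same 10% bump in opens looks convincing on the large list and indistinguishable from noise on the small one, which is why repeating tests matters so much when your list is small.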

Step 7: Implement the Lesson

After you’ve revisited your hypothesis, analyzed the results, and come to a conclusion, it’s time to apply that lesson to all of your future email marketing campaigns.

I recommend that you be very intentional about your split-testing campaigns. When you’re sending several marketing emails per month and trying to get better results fast, it’s easy to make a mental note of campaign results and think that that’s enough. The truth is, though, the numbers will get jumbled in your mind, and it can be hard to remember what you tested last time or what lesson you learned from it.

For this reason, it’s a great idea to keep a spreadsheet with your tests, results, and learnings. Yes, you can always revisit campaign analytics in your email marketing software, but you might not remember what your hypotheses and conclusions were.
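If you prefer a script to a spreadsheet app, a CSV file works just as well and opens in any spreadsheet tool. The following is a minimal sketch: the column names and the sample entries are invented for illustration, and an in-memory buffer stands in for a real file.

```python
import csv
import io

# Hypothetical columns; rename them to match how you think about your tests.
FIELDS = ["date", "question", "hypothesis", "variable", "winner", "lesson"]


def log_test(file, record):
    """Write one test record as a CSV row, adding the header row first
    when nothing has been written to the file yet."""
    writer = csv.DictWriter(file, fieldnames=FIELDS)
    if file.tell() == 0:
        writer.writeheader()
    writer.writerow(record)


log = io.StringIO()  # stand-in for a real CSV file

log_test(log, {
    "date": "2024-03-05",
    "question": "Will emojis in the subject line increase open rate?",
    "hypothesis": "Emoji subject line gets more opens",
    "variable": "subject line",
    "winner": "emoji",
    "lesson": "Re-test with emoji at the end of the subject line",
})
log_test(log, {
    "date": "2024-03-19",
    "question": "Does CTA button color affect clicks?",
    "hypothesis": "Red button gets more clicks than blue",
    "variable": "button color",
    "winner": "inconclusive",
    "lesson": "Difference too small to call; run again",
})

print(log.getvalue())
```

Whatever format you choose, the point is to record the hypothesis and the lesson alongside the numbers, since those are the parts your email software won’t remember for you.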

Step 8: Keep on Testing!

Data-driven marketers are constantly testing and iterating. You should always be running split tests, and just because you’ve replicated the results in a couple of A/B testing campaigns doesn’t mean you no longer need to test that independent variable. Things can change, so you want to make sure that your email marketing strategy is staying up to date with the changing times and your customers’ evolving preferences.

To further optimize, decide on one of three options:

- Change the independent variable. So instead of testing the subject line, maybe it’s time to test the body copy of your email.

- Add another variation of the independent variable. If you’ve been testing between blue and red call-to-action buttons, try throwing in another color, like orange.

- Run the same test again. Maybe you’ve found that a single test showed that sending your email marketing campaign on Tuesday morning at 10:30 had the highest open rates. To make sure that’s not a fluke, send another email marketing campaign on another Tuesday at 10:30 a.m. to see if you can replicate the results.

As your sample size grows and you get more experienced with simple A/B testing, you might eventually graduate to multivariate testing.
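Multivariate testing gets demanding fast, because every extra variable multiplies the number of email versions you need. A quick Python sketch shows why; the variables and variations here are just examples, and the 5,000-per-combination figure is Mailchimp’s recommendation quoted earlier.

```python
from itertools import product

# Example variables, each with its own variations.
subject_lines = ["with emoji", "without emoji"]
button_colors = ["red", "blue", "orange"]
send_days = ["Tuesday", "Saturday"]

# Every possible pairing of the variations above.
combinations = list(product(subject_lines, button_colors, send_days))

contacts_per_combination = 5_000  # Mailchimp's suggested minimum per combination
contacts_needed = len(combinations) * contacts_per_combination

print(f"{len(combinations)} combinations, {contacts_needed:,} contacts needed")
```

Three modest variables already produce a dozen versions of the email, which is why multivariate testing tends to make sense only once your list is large.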

What Software Do You Need to Run a Split Test?

There are two main software tools involved in A/B testing your emails. The most obvious one is the email marketing service that sends your emails. But don’t neglect the landing page that your email subscribers click through to; this element is really only relevant if you intend to focus on conversions, though. Things like open rate and click rate are independent of the landing page.

Below are just a few tools you can use for your email split testing. Pretty much every email marketing service has A/B testing capabilities of varying levels of sophistication.

Email Marketing Services

Landing Page Software

Get in the Lab and Start Experimenting With Email Split Testing!

If you want to start seeing real results from your email marketing—and in turn, more money coming in because of it—you need to be split testing. While you may be itching to get results fast and think you don’t have time to set up A/B tests and track them, the truth is, without split testing, you’re merely making uninformed guesses.

Running split tests is scientific, but it’s not rocket science. Let’s recap the steps:

- Ask a question

- Form a hypothesis

- Pick one independent variable

- Gather a large enough sample size

- Run the test

- Analyze the results

- Implement the lesson in future marketing emails

- Keep re-testing

Remember to keep a spreadsheet to track your findings. Once you’ve gotten the first few A/B tests under your belt, you’ll be hooked by how simple and effective they truly are. Stop guessing with your email marketing, and start thinking like a scientist!